rics.performance

Performance testing utility.

Functions

| Function | Description |
| --- | --- |
| format_perf_counter | Format performance counter output. |
| format_seconds | Format performance counter output. |
| get_best | Get a summarized view of the best run results for each candidate/data pair. |
| plot_run | Plot the results of a performance test. |
| run_multivariate_test | Run performance tests for multiple candidate methods on collections of test data. |
| to_dataframe | Create a DataFrame from performance run output, adding derived values. |

Classes

| Class | Description |
| --- | --- |
| MultiCaseTimer | Performance testing implementation for multiple candidates and data sets. |

- class MultiCaseTimer(candidate_method: dict[str, Callable[[DataType], Any]] | Collection[Callable[[DataType], Any]] | Callable[[DataType], Any], test_data: dict[Hashable, DataType] | Collection[DataType])


Performance testing implementation for multiple candidates and data sets.

For non-dict inputs, string labels will be generated automatically.

- Parameters:

candidate_method – A dict {label: function}. Alternatively, you may pass a collection of functions or a single function.

test_data – A dict {label: data} to evaluate candidates on. Alternatively, you may pass a list of data.

- run(time_per_candidate: float = 6.0, repeat: int = 5, number: int | None = None, progress: bool = False) -> dict[str, dict[Hashable, list[float]]]

Run for all cases.

Note that the test data variants aren’t included in the expected runtime computation, so increasing the number of test data variants (at initialization) will reduce the number of times each candidate is evaluated.

- Parameters:

time_per_candidate – Desired runtime for each repetition per candidate label. Ignored if number is set.

repeat – Number of times to repeat for all candidates per data label.

number – Number of times to execute each test case, per repetition. Computed based on per_case_time_allocation if None.

progress – If True, display a progress bar. Requires tqdm.

Examples

If repeat=5 and time_per_candidate=3 for an instance with 2 candidates, the total runtime will be approximately 5 * 3 * 2 = 30 seconds.

- Returns:

A dict run_results of the form {candidate_label: {data_label: [runtime, ...]}}.

- Raises:

ValueError – If the total expected runtime exceeds max_expected_runtime.
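The runtime estimate from the example can be written out as plain arithmetic. The sketch below is only an illustration of that formula; the helper names `expected_runtime` and `check_runtime` are hypothetical and not part of rics, and how the library actually obtains `max_expected_runtime` is not shown in this page.

```python
def expected_runtime(n_candidates: int, time_per_candidate: float = 6.0, repeat: int = 5) -> float:
    """Approximate total runtime in seconds (hypothetical helper, not the rics API).

    Test data variants are deliberately left out, mirroring the note that they
    are not part of the expected runtime computation.
    """
    return repeat * time_per_candidate * n_candidates


def check_runtime(total: float, max_expected_runtime: float) -> None:
    """Raise ValueError if the estimate exceeds a caller-supplied limit (assumed behaviour)."""
    if total > max_expected_runtime:
        raise ValueError(f"expected runtime {total}s exceeds limit {max_expected_runtime}s")


total = expected_runtime(n_candidates=2, time_per_candidate=3, repeat=5)
print(total)  # 5 * 3 * 2
```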

Notes

The precomputed runtime is inaccurate for functions where a single call takes longer than time_per_candidate.

See also

The timeit.Timer class, which this implementation depends on.
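To make the shape of the returned dict concrete, here is a minimal sketch that builds a `{candidate_label: {data_label: [runtime, ...]}}` structure directly with `timeit.Timer`. It is not the MultiCaseTimer implementation: the function name `sketch_run` is hypothetical, `number` is fixed rather than derived from a time budget, and reporting per-call times (total divided by `number`) is an assumption.

```python
import timeit
from typing import Any, Callable, Hashable


def sketch_run(
    candidates: dict[str, Callable[[Any], Any]],
    test_data: dict[Hashable, Any],
    repeat: int = 5,
    number: int = 10,
) -> dict[str, dict[Hashable, list[float]]]:
    """Time every candidate on every data set (illustrative only)."""
    results: dict[str, dict[Hashable, list[float]]] = {}
    for candidate_label, func in candidates.items():
        per_data: dict[Hashable, list[float]] = {}
        for data_label, data in test_data.items():
            # Bind func and data as defaults so the timed callable is self-contained.
            timer = timeit.Timer(lambda f=func, d=data: f(d))
            # Timer.repeat returns one total (for `number` calls) per repetition.
            totals = timer.repeat(repeat=repeat, number=number)
            per_data[data_label] = [total / number for total in totals]
        results[candidate_label] = per_data
    return results


run_results = sketch_run(
    {"sum": sum, "sorted": sorted},
    {"small": [3, 1, 2], "large": list(range(1000))},
    repeat=3,
    number=100,
)
```

Each inner list holds one entry per repetition, so `len(run_results["sum"]["small"])` equals `repeat`.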

- format_perf_counter(start: float, end: float | None = None) -> str

Format performance counter output.

This function formats performance counter output based on the time elapsed. For t < 120 sec, accuracy is increased whereas for durations above one minute a more user-friendly formatting is used.

- Parameters:

start – Start time.

end – End time. Set to now if not given.

- Returns:

A formatted performance counter time.

Examples

>>> format_perf_counter(0, 309613.49)
'3d 14h 0m 13s'
>>> format_perf_counter(0, 0.154)
'154ms'
>>> format_perf_counter(0, 31.39)
'31.4s'

See also

- format_seconds(t: float, *, allow_negative: bool = False) -> str

Format performance counter output.

This function formats performance counter output based on the time elapsed. For t < 120 sec, accuracy is increased whereas for durations above one minute a more user-friendly formatting is used.

- Parameters:

t – Time in seconds.

allow_negative – If True, format negative t with a leading minus sign.

- Returns:

A formatted performance counter time.

- Raises:

ValueError – If t < 0 and allow_negative=False (the default).
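The formatting rules can be illustrated with a small stand-in. The function `sketch_format_seconds` below is hypothetical and is not the rics implementation; it is written only to reproduce the three documented format_perf_counter examples and the documented ValueError. The 1-second and 120-second thresholds, and the output for any other duration, are assumptions.

```python
def sketch_format_seconds(t: float, *, allow_negative: bool = False) -> str:
    """Format a duration in seconds (illustrative stand-in, not the rics function)."""
    if t < 0:
        if not allow_negative:
            raise ValueError(f"negative duration: {t}")
        return "-" + sketch_format_seconds(-t)
    if t < 1:  # assumed threshold for switching to milliseconds
        return f"{t * 1000:.0f}ms"
    if t < 120:  # short durations keep one decimal of precision
        return f"{t:.1f}s"
    # Longer durations: whole seconds split into days/hours/minutes/seconds.
    days, rest = divmod(int(t), 86_400)
    hours, rest = divmod(rest, 3_600)
    minutes, seconds = divmod(rest, 60)
    parts = []
    if days:
        parts.append(f"{days}d")
    if days or hours:
        parts.append(f"{hours}h")
    parts.append(f"{minutes}m")
    parts.append(f"{seconds}s")
    return " ".join(parts)


print(sketch_format_seconds(309613.49))  # '3d 14h 0m 13s'
print(sketch_format_seconds(0.154))      # '154ms'
print(sketch_format_seconds(31.39))      # '31.4s'
```

With format_perf_counter, start and end would typically come from `time.perf_counter()`, and the elapsed time `end - start` is what gets formatted.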

- get_best(run_results: dict[str, dict[Hashable, list[float]]] | DataFrame, per_candidate: bool = False) -> DataFrame

Get a summarized view of the best run results for each candidate/data pair.

- Parameters:

run_results – Output of rics.performance.MultiCaseTimer.run().

per_candidate – If True, show the best times for all candidate/data pairs. Otherwise, just show the best candidate per data label.

- Returns:

The best (lowest) times for each candidate/data pair.
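The two views controlled by per_candidate can be sketched on a plain run_results dict without pandas. The helper names `best_times` and `best_candidate_per_data` are hypothetical, the timings are made-up illustration values, and the real function returns a DataFrame whose layout is not shown here.

```python
from typing import Hashable

RunResults = dict[str, dict[Hashable, list[float]]]


def best_times(run_results: RunResults) -> dict[str, dict[Hashable, float]]:
    """Lowest time for every candidate/data pair (roughly per_candidate=True)."""
    return {
        candidate: {data_label: min(times) for data_label, times in per_data.items()}
        for candidate, per_data in run_results.items()
    }


def best_candidate_per_data(run_results: RunResults) -> dict[Hashable, tuple[str, float]]:
    """Fastest candidate for each data label (roughly per_candidate=False)."""
    best: dict[Hashable, tuple[str, float]] = {}
    for candidate, per_data in run_results.items():
        for data_label, times in per_data.items():
            fastest = min(times)
            if data_label not in best or fastest < best[data_label][1]:
                best[data_label] = (candidate, fastest)
    return best


# Made-up timings in seconds, for illustration only.
example = {
    "a": {"small": [0.30, 0.20, 0.25], "large": [2.0, 1.5]},
    "b": {"small": [0.40, 0.35, 0.50], "large": [1.2, 1.1]},
}
print(best_times(example))
print(best_candidate_per_data(example))
```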

- plot_run(run_results: dict[str, dict[Hashable, list[float]]] | DataFrame, x: Literal['candidate', 'data'] | None = None, unit: Literal['s', 'ms', 'μs', 'us', 'ns'] | None = None, **kwargs: Any) -> None

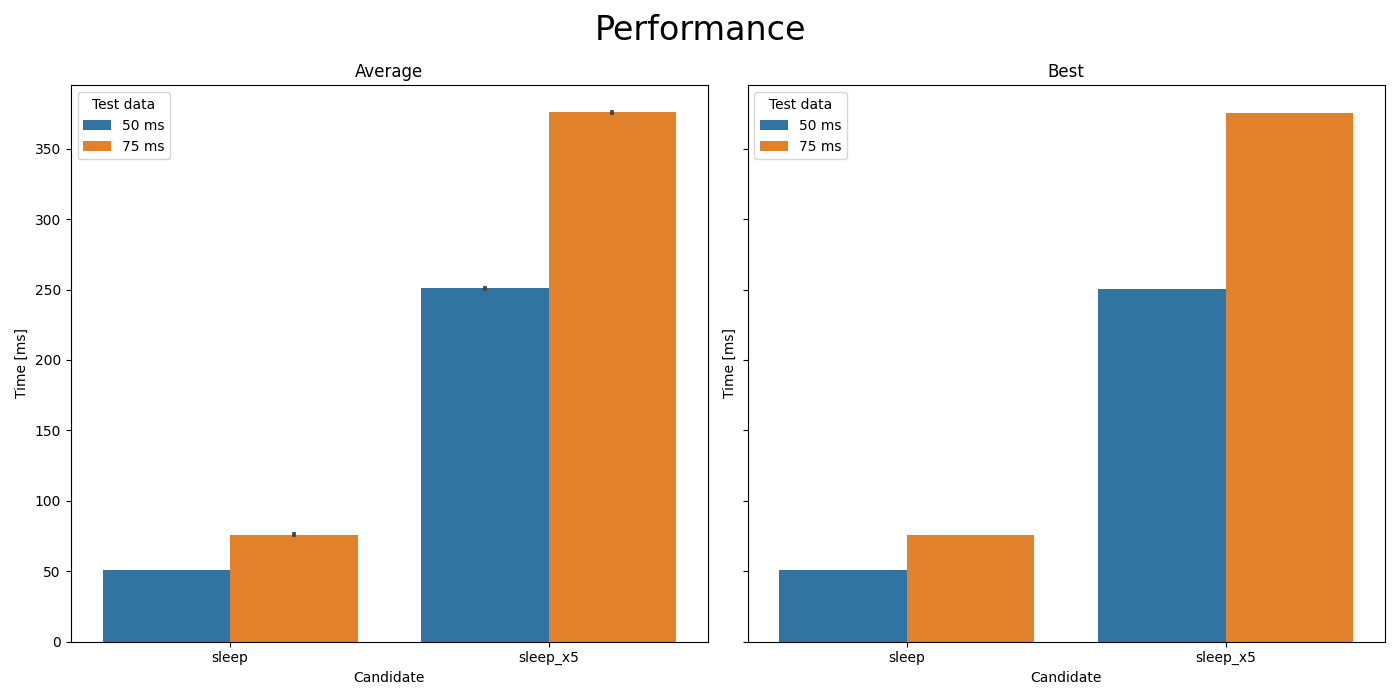

Plot the results of a performance test.

Comparison of

time.sleep(t)andtime.sleep(5*t).#- Parameters:

run_results – Output of

rics.performance.MultiCaseTimer.run().x – The value to plot on the X-axis. Default=derive.

unit – Time unit to plot on the Y-axis. Default=derive.

**kwargs – Keyword arguments for seaborn.barplot().

- Raises:

ModuleNotFoundError – If Seaborn isn’t installed.

TypeError – For unknown unit arguments.
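As a rough illustration of the unit argument, the sketch below scales a time in seconds to one of the accepted units and raises TypeError for anything else. The helper `to_unit` and the lookup table are hypothetical; how plot_run derives a default unit when none is given is not covered.

```python
# Multipliers from seconds to each accepted unit; 'us' is an ASCII alias for 'μs'.
_UNIT_FACTORS = {"s": 1.0, "ms": 1e3, "μs": 1e6, "us": 1e6, "ns": 1e9}


def to_unit(seconds: float, unit: str) -> float:
    """Convert seconds to the given unit (hypothetical helper, not the rics API)."""
    try:
        factor = _UNIT_FACTORS[unit]
    except KeyError:
        raise TypeError(f"unknown unit: {unit!r}") from None
    return seconds * factor


print(to_unit(0.5, "ms"))
```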

- run_multivariate_test(candidate_method: dict[str, Callable[[DataType], Any]] | Collection[Callable[[DataType], Any]] | Callable[[DataType], Any], test_data: dict[Hashable, DataType] | Collection[DataType], time_per_candidate: float = 6.0, plot: bool = True, **figure_kwargs: Any) -> DataFrame

Run performance tests for multiple candidate methods on collections of test data.

This is a convenience method which combines MultiCaseTimer.run(), to_dataframe() and, if plotting is enabled, plot_run(). For full functionality, these methods should be used directly.

- Parameters:

candidate_method – A single method, collection of functions or a dict {label: function} of candidates.

test_data – A single datum, or a dict {label: data} to evaluate candidates on.

time_per_candidate – Desired runtime for each repetition per candidate label.

plot – If True, plot a figure using plot_run().

**figure_kwargs – Keyword arguments for seaborn.barplot(). Ignored if plot=False.

- Returns:

A long-format DataFrame of results.

- Raises:

ModuleNotFoundError – If Seaborn isn’t installed and plot=True.

See also

The plot_run() and get_best() functions.

- to_dataframe(run_results: dict[str, dict[Hashable, list[float]]], names: Iterable[str] = ()) -> DataFrame

Create a DataFrame from performance run output, adding derived values.

- Parameters:

run_results – A dict of the form {candidate_label: {data_label: [runtime, ...]}}, as returned by rics.performance.MultiCaseTimer.run().

names – Categories for keys in the data. If given, all data labels must be tuples of the same length as names.

- Returns:

The run_results input wrapped in a DataFrame.
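The nested dict can be flattened into long-format rows as sketched below, including the names handling for tuple data labels. This is not the to_dataframe implementation: the helper `to_rows` and the column names ("candidate", "data", "run_no", "time") are assumptions, and the derived values mentioned above are not reproduced. Passing the rows to `pandas.DataFrame(rows)` would give a long-format frame.

```python
from typing import Any, Hashable, Iterable


def to_rows(
    run_results: dict[str, dict[Hashable, list[float]]],
    names: Iterable[str] = (),
) -> list[dict[str, Any]]:
    """Flatten run results into one row per timing (illustrative only)."""
    names = tuple(names)
    rows: list[dict[str, Any]] = []
    for candidate, per_data in run_results.items():
        for data_label, times in per_data.items():
            extra: dict[str, Any] = {}
            if names:
                # With names given, every data label must be a tuple of matching length.
                if not isinstance(data_label, tuple) or len(data_label) != len(names):
                    raise ValueError(f"data label {data_label!r} does not match names {names!r}")
                extra = dict(zip(names, data_label))
            for run_no, time in enumerate(times):
                rows.append(
                    {"candidate": candidate, "data": data_label, "run_no": run_no, "time": time, **extra}
                )
    return rows


# Made-up timings in seconds, keyed by (size, dtype) tuples.
rows = to_rows({"a": {(10, "int"): [0.1, 0.2]}}, names=("size", "dtype"))
print(rows[0])
```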

Modules

- Multivariate performance testing from the command line.
- Plotting backend for the performance framework.
- Types used by the framework.